Inference

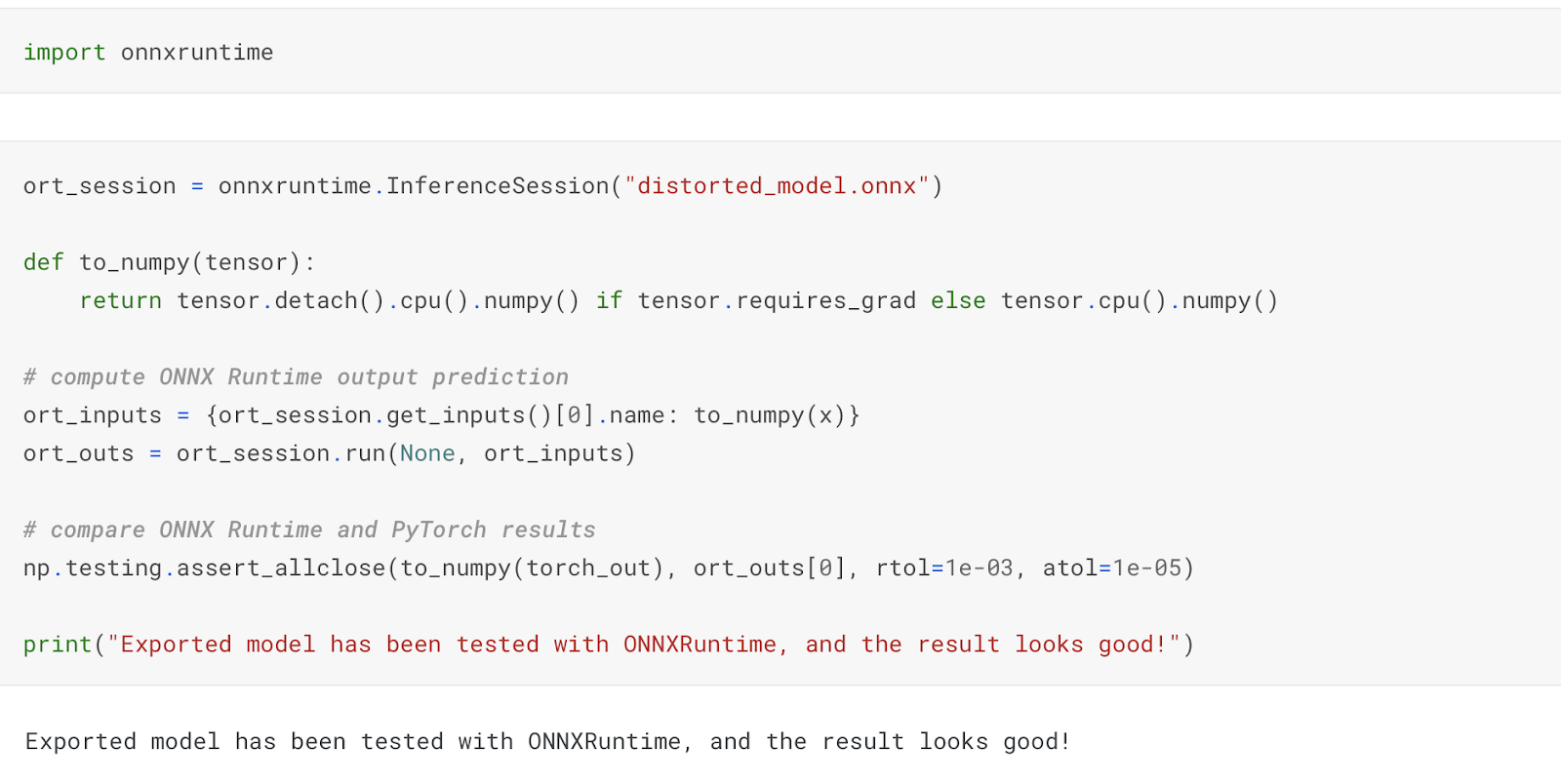

This week my main goal was to develop an inference pipeline for the segmentation model. To make inference faster, I converted the model to ONNX (my plan is to convert it to TensorRT next). Here is the notebook with the steps for converting a model from PyTorch to ONNX.
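For readers without the notebook at hand, here is a minimal sketch of what such a conversion can look like. It is not the notebook's code: the function names, the tensor names `input` and `output`, and the 512x512 input size are assumptions made for illustration, and the real model may need a different dummy input.

```python
def batch_dynamic_axes(input_name, output_name):
    """Mark dimension 0 (the batch) as variable for the given tensor names."""
    return {input_name: {0: "batch"}, output_name: {0: "batch"}}


def export_to_onnx(model, onnx_path, input_shape=(1, 3, 512, 512), opset_version=None):
    """Export a PyTorch model to an ONNX file.

    `model` is any torch.nn.Module; `input_shape` is an assumed NCHW shape.
    `opset_version=None` leaves the choice to the exporter's default.
    """
    # Imported here because PyTorch is only needed for the one-off export,
    # not for running the exported model later.
    import torch

    model.eval()  # switch off dropout / batch-norm updates before tracing
    dummy_input = torch.randn(*input_shape)

    torch.onnx.export(
        model,
        (dummy_input,),
        str(onnx_path),
        input_names=["input"],
        output_names=["output"],
        dynamic_axes=batch_dynamic_axes("input", "output"),
        opset_version=opset_version,
    )
    return onnx_path
```

Calling `export_to_onnx(model, "segmentation.onnx")` (a hypothetical file name) would write the ONNX file next to the script. Marking the batch dimension as dynamic lets the same file serve single images and batches.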

The resulting model works as well as the original one (there is no significant difference in their predictions):

Results comparison
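One way to put a number on "no significant difference" is to compare the two models' outputs on the same image. The sketch below assumes both outputs are per-pixel probability maps of a binary segmentation with the same shape; the function name, the 0.5 threshold, and the choice of metrics are my assumptions, not the comparison used for the figure.

```python
import numpy as np


def compare_predictions(reference, candidate, threshold=0.5):
    """Compare two probability maps of identical shape.

    Returns the largest absolute difference between the raw outputs, the IoU
    of the thresholded masks, and the fraction of pixels with the same label.
    """
    reference = np.asarray(reference, dtype=np.float32)
    candidate = np.asarray(candidate, dtype=np.float32)
    if reference.shape != candidate.shape:
        raise ValueError("predictions must have the same shape")

    max_abs_diff = float(np.max(np.abs(reference - candidate)))

    ref_mask = reference > threshold
    cand_mask = candidate > threshold
    intersection = np.logical_and(ref_mask, cand_mask).sum()
    union = np.logical_or(ref_mask, cand_mask).sum()
    # Two empty masks are treated as a perfect match.
    mask_iou = 1.0 if union == 0 else float(intersection / union)
    pixel_agreement = float(np.mean(ref_mask == cand_mask))

    return {
        "max_abs_diff": max_abs_diff,
        "mask_iou": mask_iou,
        "pixel_agreement": pixel_agreement,
    }
```

Here `reference` would be the PyTorch output and `candidate` the ONNX output for the same input. Small floating-point differences in `max_abs_diff` are expected after conversion; what matters for segmentation is that the thresholded masks agree.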

Function-based inference

In case the model needs to be run inside another script or application, I have packaged it as a Python module. This module can now be imported from any other module or function.

The ONNX pipeline from the module can also be used on its own in any part of a service or program, which makes the recognition more flexible.
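To illustrate the idea, here is a sketch of how such a module could be laid out. The names `OnnxSegmenter`, `preprocess`, and `postprocess` are hypothetical, and the pre- and post-processing (scaling to [0, 1], a single-channel logit output, a 0.5 threshold) are assumptions that have to be matched to how the model was actually trained.

```python
import numpy as np


def preprocess(image):
    """Turn an HxWx3 uint8 image into a 1x3xHxW float32 batch in [0, 1]."""
    array = np.asarray(image, dtype=np.float32) / 255.0
    batch = np.transpose(array, (2, 0, 1))[np.newaxis, ...]
    return np.ascontiguousarray(batch)


def postprocess(logits, threshold=0.5):
    """Turn an assumed 1x1xHxW logit map into an HxW uint8 mask (0 or 255)."""
    probabilities = 1.0 / (1.0 + np.exp(-logits[0, 0]))  # sigmoid
    return (probabilities > threshold).astype(np.uint8) * 255


class OnnxSegmenter:
    """Thin wrapper that loads the ONNX file once and is callable per image."""

    def __init__(self, model_path, providers=("CPUExecutionProvider",)):
        # Imported here so the helpers above stay usable without onnxruntime.
        import onnxruntime as ort

        self.session = ort.InferenceSession(str(model_path), providers=list(providers))
        self.input_name = self.session.get_inputs()[0].name

    def __call__(self, image):
        outputs = self.session.run(None, {self.input_name: preprocess(image)})
        return postprocess(outputs[0])
```

Another script would then only need something like `segmenter = OnnxSegmenter("segmentation.onnx")` followed by `mask = segmenter(image)`. Keeping `preprocess` and `postprocess` as plain functions is what allows the ONNX part to be reused separately.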

Script-based inference

I have also developed a script that accepts a directory of input images and creates a folder with the segmentation masks for those images. I believe such a script could be useful when some historical data needs to be labeled.
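The core of such a script can be sketched roughly as follows. This is an illustration under assumptions rather than the actual script: the function names, the `_mask.png` naming scheme, the list of image extensions, and the use of Pillow for reading and writing are all my choices, and `predict_mask` stands for any callable that maps an HxWx3 uint8 array to an HxW uint8 mask.

```python
import argparse
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp", ".tif", ".tiff"}


def find_images(input_dir):
    """Return the image files directly inside input_dir, sorted by name."""
    return sorted(
        path
        for path in Path(input_dir).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
    )


def mask_path(image_path, output_dir):
    """Build the output path for the mask of a given image."""
    return Path(output_dir) / f"{Path(image_path).stem}_mask.png"


def segment_directory(input_dir, output_dir, predict_mask):
    """Run predict_mask on every image and save the masks to output_dir."""
    # Imported here so the path helpers work without NumPy or Pillow.
    import numpy as np
    from PIL import Image

    output_dir = Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for image_path in find_images(input_dir):
        image = np.asarray(Image.open(image_path).convert("RGB"))
        target = mask_path(image_path, output_dir)
        Image.fromarray(predict_mask(image)).save(target)
        written.append(target)
    return written


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Segment every image in a folder.")
    parser.add_argument("input_dir", type=Path, help="folder with input images")
    parser.add_argument("output_dir", type=Path, help="folder for the masks")
    return parser.parse_args(argv)


def main(predict_mask, argv=None):
    """Entry point; predict_mask is supplied by the caller, e.g. an ONNX wrapper."""
    args = parse_args(argv)
    return segment_directory(args.input_dir, args.output_dir, predict_mask)
```

Passing the predictor in as an argument keeps the folder handling independent of the model, so the same loop works with the PyTorch model, the ONNX model, or a later TensorRT version.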
