Some info about the data I'm going to work with

Data is the main part of any DS/ML project. In this post I will discuss the data sources for my project.

Cholec80

This dataset provides a large amount (about 80 GB) of laparoscopic videos with phase annotations. In addition to phases, it provides annotations of the surgical tools used. In the rest of this section I rely on the paper that accompanies the dataset.

More about the videos (Twinanda et al., 2016):

- There are 80 videos (one surgery per video)

- The surgeries were performed by 13 surgeons (which could introduce variation in the data domain)

- There are 7 surgical phases: Preparation, Calot triangle dissection, Clipping and cutting, Gallbladder dissection, Gallbladder packaging, Gallbladder retraction, Cleaning and coagulation.

|

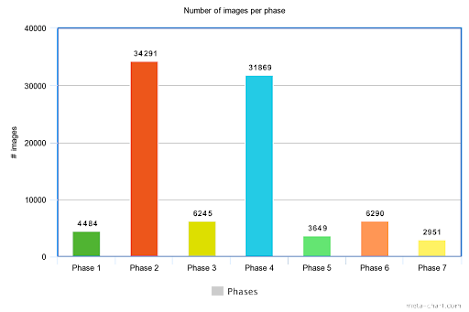

| Figure 1 |

The description sounds great at first, but if we read the paper (or look at Figure 1), it is clear that there is a significant class imbalance in the Cholec80 dataset. This could cause issues when training the model; however, I expect that class-balanced batches (batches containing the same number of samples from every class) could help.
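A minimal sketch of class-balanced batching in plain Python, assuming per-frame phase labels are already available as integers. The function `balanced_batches` and its parameters are hypothetical illustrations, not part of any library. Rare classes are sampled with replacement (oversampling), so every batch contains exactly `per_class` samples from each class.

```python
import random
from collections import defaultdict
from typing import Dict, Iterator, List, Sequence


def balanced_batches(
    labels: Sequence[int],
    per_class: int,
    num_batches: int,
    seed: int = 0,
) -> Iterator[List[int]]:
    """Yield lists of sample indices with exactly `per_class` samples per class."""
    rng = random.Random(seed)

    # Group sample indices by their class label (e.g. surgical phase id).
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    for _ in range(num_batches):
        batch: List[int] = []
        for cls in sorted(by_class):
            # Sample with replacement so rare classes can fill their quota.
            batch.extend(rng.choices(by_class[cls], k=per_class))
        rng.shuffle(batch)  # avoid batches ordered by class
        yield batch


if __name__ == "__main__":
    # Toy, heavily imbalanced labels: 90 / 8 / 2 frames for three phases.
    labels = [0] * 90 + [1] * 8 + [2] * 2
    for batch in balanced_batches(labels, per_class=4, num_batches=2):
        # prints [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2] for each batch
        print(sorted(labels[i] for i in batch))
```

In PyTorch, `torch.utils.data.WeightedRandomSampler` with inverse-frequency weights gives a similar effect, although batches are then balanced only in expectation rather than exactly.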

There is also an existing model, EndoNet, that predicts the phase on this dataset (Twinanda et al., 2016). However, it was developed in 2016, so I see no reason to reimplement it. I believe a standard ResNet is a perfectly reasonable choice for this scene classification problem.

NB: I have already done the tool classification task, see this.

Surgical tool detection

This dataset contains about 3,000 images of four tools: scalpel, straight dissection clamp, straight Mayo scissors and curved Mayo scissors (Lavado, 2018). The object detection annotations are already provided in a format suitable for YOLO models, which makes the dataset easier to use.
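For reference, the common YOLO label format stores one `.txt` file per image with one line per object, `class_id x_center y_center width height`, where the coordinates are normalized by the image width and height. The sketch below uses a hypothetical helper, `parse_yolo_labels`, to convert such lines into absolute pixel corners. The mapping from `class_id` to tool names comes from the dataset's own class list and is not assumed here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Box:
    class_id: int
    x1: float  # left, in pixels
    y1: float  # top, in pixels
    x2: float  # right, in pixels
    y2: float  # bottom, in pixels


def parse_yolo_labels(text: str, img_w: int, img_h: int) -> List[Box]:
    """Convert YOLO-format label lines into pixel-space corner boxes."""
    boxes: List[Box] = []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        class_id = int(parts[0])
        xc, yc, w, h = (float(v) for v in parts[1:5])
        # Scale normalized center/size back to pixels.
        xc, w = xc * img_w, w * img_w
        yc, h = yc * img_h, h * img_h
        boxes.append(Box(class_id, xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2))
    return boxes


if __name__ == "__main__":
    # One object of class 2 centred in a 640x480 image.
    print(parse_yolo_labels("2 0.5 0.5 0.25 0.5\n", 640, 480))
```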

The only issue with the data is that it comes from a single domain, a surgical tray (Lavado, 2018), so there is no guarantee that a model trained on it will be as robust outside that domain as within it. However, the work of Vidit & Salzmann (2021) shows that YOLOv5 performs reasonably well across domains once domain adaptation is applied, so their attention-based domain adaptation could be used here.

Additionally, the Cholec80 data with bounding boxes could be used to train an object detection model to find surgical tools in images (Jin et al., 2018). It has slightly fewer images than the aforementioned dataset (only 2,532), but I expect its domain to be exactly what is needed.

Segmentation

For image segmentation, another extension of the Cholec80 dataset, CholecSeg8k (Hong et al., 2020), could be used. It has 13 classes. The list of class names is shown in Figure 2, and an example of a segmented image is shown in Figure 3.


Unfortunately, as with Cholec80, the classes are imbalanced: the number of pixels per class varies widely because organs, surgical tools and background differ significantly in shape and size. I believe that segmentation loss functions designed for class imbalance (such as Focal Loss) should mitigate this problem.
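Focal Loss down-weights pixels that are already classified well by scaling the cross-entropy term: FL(p_t) = -alpha_t * (1 - p_t)^gamma * log(p_t), where p_t is the predicted probability of the true class. Below is a minimal pure-Python sketch for per-pixel multi-class logits; the function name and the optional per-class weight list `alpha` are illustrative assumptions. With gamma = 0 and no weights it reduces to ordinary cross-entropy.

```python
import math
from typing import List, Optional, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one pixel's class logits."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def focal_loss(
    logits: Sequence[Sequence[float]],  # one row of class logits per pixel
    targets: Sequence[int],  # ground-truth class index per pixel
    gamma: float = 2.0,
    alpha: Optional[Sequence[float]] = None,  # optional per-class weights
) -> float:
    """Mean multi-class focal loss over all pixels."""
    total = 0.0
    for row, t in zip(logits, targets):
        log_p_t = log_softmax(row)[t]
        p_t = math.exp(log_p_t)
        a_t = 1.0 if alpha is None else alpha[t]
        # (1 - p_t)^gamma shrinks the loss of confident, correct pixels.
        total += -a_t * (1.0 - p_t) ** gamma * log_p_t
    return total / len(targets)


if __name__ == "__main__":
    easy = focal_loss([[5.0, 0.0]], [0])  # confident and correct
    hard = focal_loss([[0.0, 5.0]], [0])  # confident and wrong
    print(easy, hard)
```

In an actual training loop this would be written with tensor operations; torchvision ships `torchvision.ops.sigmoid_focal_loss`, which is the sigmoid (per-class binary) variant rather than the softmax one sketched here.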

Conclusion

I have a large amount of data that is open source and ready to use. What remains is to build appropriate models and train them on this data. After that, the main goal will be adapting the models to a VR environment.

References

1. Twinanda, A. P., Shehata, S., Mutter, D., Marescaux, J., De Mathelin, M., & Padoy, N. (2016). EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Transactions on Medical Imaging, 36(1), 86-97.

2. Lavado, D. M. (2018). Sorting Surgical Tools from a Clustered Tray-Object Detection and Occlusion Reasoning (Doctoral dissertation, Universidade de Coimbra).

3. Vidit, V., & Salzmann, M. (2021). Attention-based Domain Adaptation for Single Stage Detectors. arXiv preprint arXiv:2106.07283.

4. Jin, A., Yeung, S., Jopling, J., Krause, J., Azagury, D., Milstein, A., & Fei-Fei, L. (2018, March). Tool detection and operative skill assessment in surgical videos using region-based convolutional neural networks. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV) (pp. 691-699). IEEE.

5. Hong, W. Y., Kao, C. L., Kuo, Y. H., Wang, J. R., Chang, W. L., & Shih, C. S. (2020). CholecSeg8k: A Semantic Segmentation Dataset for Laparoscopic Cholecystectomy Based on Cholec80. arXiv preprint arXiv:2012.12453.
